Treat Infrastructure as Code development like a software engineering project. This article builds on the post “Test Infrastructure as Code” and digs a little deeper into how to unit test Infrastructure as Code.

Generally, Infrastructure as Code (IaC) follows one of two approaches: declarative or imperative. This article focuses on the declarative approach and how to test it.

Declarative

When following the best practices for Infrastructure as Code and using a declarative approach to provision resources, the unit is the configuration file: the Azure Resource Manager (ARM) template, which is a JSON file.

Imperative

The imperative approach, on the other hand, as @pascalnaber describes it in his wonderful blog post “Stop using ARM templates! Use the Azure CLI instead”, requires that you actually test the function or script you are using to provision resources.

Unit Tests

The foundation of your test suite will be made up of unit tests. Your unit tests make sure that a certain unit (your subject under test) of your codebase works as intended. Unit tests have the narrowest scope of all the tests in your test suite. The number of unit tests in your test suite will largely outnumber any other type of test. - The Practical Test Pyramid

With an Azure Resource Manager template (ARM template) as the subject under test, we are looking for a way to test a JSON configuration file. I have not yet heard of a unit testing framework for configuration files such as YAML or JSON. The only tools I am aware of are linters for these file types. The linter approach gives us a good starting point to dig deeper into static analysis of code.

The configuration file usually describes the desired state of the system to be deployed. The specified system generally consists of one or more Azure resources that need to be provisioned. Each resource in an Azure Resource Manager template adheres to a specific schema. The schema describes the resource properties that need to be passed for the resource to be deployed. It also indicates mandatory and optional values. The human-readable form of the schema can be found in the Azure Template Reference.

Taking the automation account as an example, the resource implementation in an Azure Resource Manager template looks like this.

{

"name": "string",

"type": "Microsoft.Automation/automationAccounts",

"apiVersion": "2015-10-31",

"properties": {

"sku": {

"name": "string",

"family": "string",

"capacity": "integer"

}

},

"location": "string",

"tags": {}

}

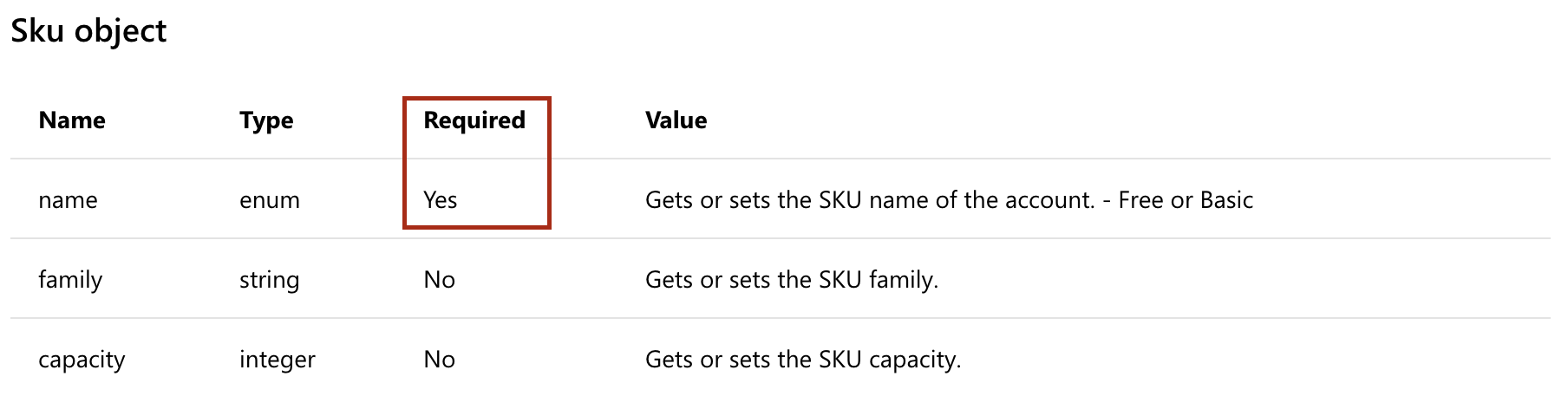

Inside the properties property only the SKU property needs to be configured.

The SKU object only expects to have a name as a required parameter.

A quick way to test could therefore be validating the schema and ensuring all mandatory parameters are set.
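
A minimal sketch of such a check in plain PowerShell, using the automation account resource from above. The helper name `Get-MissingProperty` and the list of required property paths are assumptions made for this illustration; the authoritative list of mandatory properties is the Azure Template Reference.

```powershell
# Hypothetical helper: returns the dotted property paths that are absent from a resource object.
function Get-MissingProperty {
    param (
        [Parameter(Mandatory)] [psobject] $Resource,
        [Parameter(Mandatory)] [string[]] $RequiredPath
    )

    foreach ($path in $RequiredPath) {
        $current = $Resource
        foreach ($segment in $path.Split('.')) {
            # Walk one level down; report the path as soon as a segment is missing.
            if ($null -eq $current -or $current.PSObject.Properties.Name -notcontains $segment) {
                $path
                break
            }
            $current = $current.$segment
        }
    }
}

# A resource as it would appear inside the "resources" array of a template.
$resource = @'
{
  "name": "my-automation-account",
  "type": "Microsoft.Automation/automationAccounts",
  "apiVersion": "2015-10-31",
  "properties": { "sku": { "family": "string" } },
  "location": "westeurope"
}
'@ | ConvertFrom-Json

# Assumed mandatory properties for this example.
$required = 'name', 'type', 'apiVersion', 'location', 'properties.sku.name'

$missing = @(Get-MissingProperty -Resource $resource -RequiredPath $required)
$missing   # lists properties.sku.name, because the sku object has no name
```

An empty result means every listed property is present, which maps naturally onto a single assertion in a test.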

Windows 10 ships with a pre-installed testing framework for PowerShell called Pester. Pester is the ubiquitous test and mock framework for PowerShell. I recommend getting the latest version from the PSGallery:

Install-Module -Name Pester -Scope CurrentUser -Force

Static Code Analysis

I personally refer to unit tests for ARM templates as asserted static code analysis. Using assertions, the test should parse, validate and check for best practices within the given configuration file (ARM template).

I know of two publicly available static code analysis tests: one is implemented by the Azure Virtual Datacenter (VDC) and one in Az.Test.

General Approach

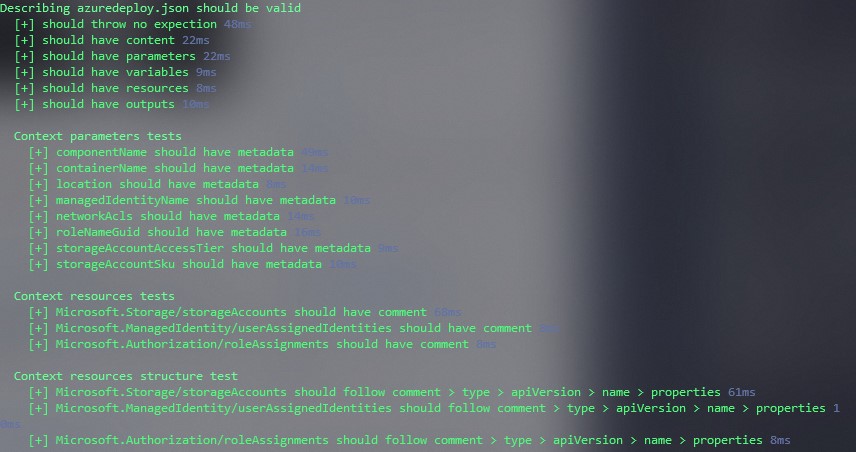

We want to ensure we have consistency and best practices checked when testing our ARM templates. The output should be human readable and easy to understand.

The tests should be reusable and should be applicable to all ARM templates.

This script is a good baseline for adding your own best practices and checks.

We can add support for multiple ARM templates located in a given path by wrapping the script in a foreach loop.

To reuse it we can rename azuredeploy.Tests.ps1 to azuredeploy.spec.ps1.

“Spec” expresses that this is a specification that is going to be validated.

If you wish to create script files manually with different conventions, that’s fine, but all Pester test scripts must end with .Tests.ps1 in order for Invoke-Pester to run them. See Creating a Pester Test.

Because Pester picks up every *.Tests.ps1 file, renaming keeps the specification from being triggered directly. Instead, our loop through all Resource Manager templates should invoke the specification and validate each template.

Hence we are going to create a new file with the name azuredeploy.Tests.ps1 which will invoke our azuredeploy.spec.ps1 with a given ARM template.

This can of course be merged and adjusted as this approach is very opinionated.

However, using this approach enables you to extend the checks dynamically by changing or adding more *.spec.ps1 files.

#azuredeploy.Tests.ps1

param (

$Path = $PSScriptRoot # Assuming the test is located in the template folder

)

# Find all Azure Resource Manager Templates in a given Path

$TemplatePath = Get-ChildItem -Path "$Path" -include "*azuredeploy.json" -Recurse

# Loop through all templates found

foreach ($Template in $TemplatePath) {

# I would recommend to add any kind of validation at this point to ensure we actually found ARM templates.

$Path = $Template.FullName

# invoke our former `azuredeploy.Tests.ps1` script with the found template.

# this could be wrapped into a loop of all *.spec.ps1 files, similar to the ARM template loop.

. "$PSScriptRoot/azuredeploy.spec.ps1" -Path $Path

}

Now we can run these tests against multiple ARM templates, ensuring we have consistency and best practice checks in place.

We can add more checks to our azuredeploy.spec.ps1.

Eventually we could add support for multiple spec files, by adding a loop that invokes all *.spec.ps1 files.
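
One possible shape for that loop is sketched below. The function name `Invoke-TemplateSpec` and its parameters are hypothetical; the sketch assumes every spec file accepts the template through a `-Path` parameter, as azuredeploy.spec.ps1 does. It uses the call operator `&` instead of dot-sourcing so that each spec runs in its own scope.

```powershell
# Hypothetical runner: invokes every *.spec.ps1 in $SpecPath once per ARM template found below $Path.
function Invoke-TemplateSpec {
    param (
        [Parameter(Mandatory)] [string] $Path,
        [Parameter(Mandatory)] [string] $SpecPath
    )

    $templates = @(Get-ChildItem -Path $Path -Filter '*azuredeploy.json' -Recurse -File)
    $specs     = @(Get-ChildItem -Path $SpecPath -Filter '*.spec.ps1' -File)

    # Fail early instead of silently running zero checks.
    if ($templates.Count -eq 0) { throw "No ARM templates found in '$Path'." }
    if ($specs.Count -eq 0)     { throw "No spec files found in '$SpecPath'." }

    foreach ($template in $templates) {
        foreach ($spec in $specs) {
            # Each spec is expected to accept the template through -Path.
            & $spec.FullName -Path $template.FullName
        }
    }
}
```

Inside azuredeploy.Tests.ps1 this could be called as `Invoke-TemplateSpec -Path $PSScriptRoot -SpecPath $PSScriptRoot`, which keeps the entry point that Pester discovers very small.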

For starters, we created a flexible baseline that ensures a set of best practices and mandatory properties are tested.

Next up we are going to have a look at how to extend on this approach and test a specific ARM template implementation.

Az.Test

The Az.Test module tries to take this approach and create a module that solely focuses on implementing and providing opinionated spec files that are wrapped into usable functions.

Test-xAzStatic -TemplateUri $TemplateUri

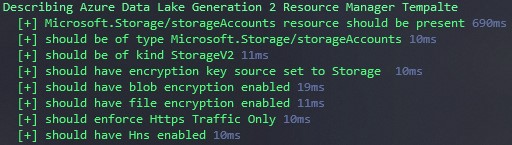

Resource-Specific Static Analysis: Example Azure Data Lake Gen 2 Implementation

Let's take an example of statically analyzing a given ARM template. We want to ensure that requirements are implemented as specified.

The (assumed) requirements for this case are:

- Provision an Azure Data Lake Storage Account Generation 2

- Ensure encryption is enforced at rest

- Ensure encryption is enforced in transit

- Allow application teams to define a set of geo replication settings

- Allow application teams to specify access availability

- Allow a set of dynamically created network access control lists (ACLs) to be processed

Note: The dynamic ACLs deserve a deeper dive of their own. For now, assume a valid ACL object is passed to the deployment.

Get the code azuredeploy.json.

//azuredeploy.json

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"resourceName": {

"type": "string",

"metadata": {

"description": "Name of the Data Lake Storage Account"

}

},

"location": {

"type": "string",

"defaultValue": "[resourceGroup().location]",

"metadata": {

"description": "Azure location for deployment"

}

},

"storageAccountSku": {

"type": "string",

"defaultValue": "Standard_ZRS",

"allowedValues": [

"Standard_LRS",

"Standard_GRS",

"Standard_RAGRS",

"Standard_ZRS",

"Standard_GZRS",

"Standard_RAGZRS"

],

"metadata": {

"description": "Optional. Storage Account Sku Name."

}

},

"storageAccountAccessTier": {

"type": "string",

"defaultValue": "Hot",

"allowedValues": ["Hot", "Cool"],

"metadata": {

"description": "Optional. Storage Account Access Tier."

}

},

"networkAcls": {

"type": "string",

"metadata": {

"description": "Optional. Networks ACLs Object, this value contains IPs to whitelist and/or Subnet information."

}

}

},

"variables": {},

"resources": [

{

"comments": "Azure Data Lake Gen 2 Storage Account",

"type": "Microsoft.Storage/storageAccounts",

"apiVersion": "2019-04-01",

"name": "[parameters('resourceName')]",

"sku": {

"name": "[parameters('storageAccountSku')]"

},

"kind": "StorageV2",

"location": "[parameters('location')]",

"tags": {},

"identity": {

"type": "SystemAssigned"

},

"properties": {

"encryption": {

"services": {

"blob": {

"enabled": true

},

"file": {

"enabled": true

}

},

"keySource": "Microsoft.Storage"

},

"isHnsEnabled": true,

"networkAcls": "[json(parameters('networkAcls'))]",

"accessTier": "[parameters('storageAccountAccessTier')]",

"supportsHttpsTrafficOnly": true

},

"resources": [

{

"comments": "Deploy advanced threat protection to storage account",

"type": "providers/advancedThreatProtectionSettings",

"apiVersion": "2017-08-01-preview",

"name": "Microsoft.Security/current",

"dependsOn": [

"[resourceId('Microsoft.Storage/storageAccounts/', parameters('resourceName'))]"

],

"properties": {

"isEnabled": true

}

}

]

}

],

"outputs": {

"resourceID": {

"type": "string",

"value": "[resourceId('Microsoft.Storage/storageAccounts', parameters('resourceName'))]"

},

"componentName": {

"type": "string",

"value": "[parameters('resourceName')]"

}

}

}

Now, all requirements should be implemented. Based on the requirement specification we can implement tests. We will leverage PowerShell's native JSON capabilities to validate the requirements.

We can assert, based on our implementation, whether the requirements are implemented. We also want our tests written so that the output is human-readable and as close to the requirements as possible.

First, we accept a given ARM template path - by default we will assume the template is called azuredeploy.json and that it is located in the same directory as the test. Otherwise a path to an ARM template can be passed via the -Path parameter.

We test for the presence of the template, validate that we can read it, and convert it from a JSON string to a PowerShell object.

Having the JSON file as an object, we can query its properties natively for a resource that matches the type Microsoft.Storage/storageAccounts.

For readability, we store the resource's configuration in $resource.
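
The steps above can be sketched in plain PowerShell as follows. This is not the original test file: the helper name `Test-DataLakeRequirement` and the wording of each requirement are assumptions for illustration, and the sketch deliberately avoids Pester so that it runs anywhere. In a Pester suite, each row it returns would correspond to one `It` block.

```powershell
# Hypothetical helper: evaluates the assumed requirements against a template file
# and returns one result object per requirement.
function Test-DataLakeRequirement {
    param (
        [Parameter(Mandatory)] [string] $Path
    )

    if (-not (Test-Path -Path $Path)) { throw "Template '$Path' not found." }

    # ConvertFrom-Json throws a terminating error if the content is not valid JSON.
    $template = Get-Content -Path $Path -Raw | ConvertFrom-Json

    # For readability, keep the storage account configuration in $resource.
    $resource = $template.resources |
        Where-Object { $_.type -eq 'Microsoft.Storage/storageAccounts' } |
        Select-Object -First 1

    $encryption = $resource.properties.encryption.services

    # Requirement text on the left, the condition that proves it on the right.
    $checks = [ordered]@{
        'is a StorageV2 account'             = $resource.kind -eq 'StorageV2'
        'has hierarchical namespace enabled' = $resource.properties.isHnsEnabled -eq $true
        'encrypts blobs at rest'             = $encryption.blob.enabled -eq $true
        'encrypts files at rest'             = $encryption.file.enabled -eq $true
        'enforces HTTPS in transit'          = $resource.properties.supportsHttpsTrafficOnly -eq $true
        'takes the SKU from a parameter'     = $resource.sku.name -eq "[parameters('storageAccountSku')]"
    }

    foreach ($name in $checks.Keys) {
        [pscustomobject]@{ Requirement = $name; Passed = [bool] $checks[$name] }
    }
}
```

Calling `Test-DataLakeRequirement -Path ./azuredeploy.json | Where-Object { -not $_.Passed }` lists only the requirements that the template does not satisfy, phrased close to the requirement list.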

Now we have tested our implementation by validating in our tests that the requirements are present in the configuration file. If the ARM template changes, we can still be sure our requirements are met as long as all tests pass. The output of these tests is written to be human-readable so that non-technical people can interpret it.

Having this kind of validation in place, we can ensure the specified requirements are configured and in place at development time. To validate that the requirements are deployed and correctly configured we should write Acceptance Tests. These tests should be triggered after the deployment of the actual resource. Acceptance Tests follow the same procedure but will assert on the object returned from the Azure Resource Manager.

VDC implementation

See VDC code blocks module.tests.ps1 for the full implementation of the engine.

The VDC module tests assert that the converted JSON has the expected properties in place.

The basic Azure Resource Manager template schema describes $schema, contentVersion, parameters, variables, resources and outputs as top level properties.

Here is the basic schema as an example.

// azuredeploy.json

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {},

"variables": {},

"resources": [],

"outputs": {}

}

PowerShell has native support for working with JSON files. We can easily read and convert a JSON file into a PowerShell object. If the conversion is not possible or the JSON is invalid, a terminating error is thrown.

The validity of JSON could be the first test.

$TemplateFile = './azuredeploy.json'

$TemplateJSON = Get-Content $TemplateFile -Raw | ConvertFrom-Json

$TemplateJSON

# $schema : https://schema....

# contentVersion : 1.0.0.0

# parameters :

# variables :

# resources : {}

# outputs :

Get-Content -Raw will read the content of a file as one string rather than an array of strings per line.

ConvertFrom-Json converts the passed string into a PowerShell object.
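
Because invalid JSON surfaces as a terminating error, the validity check can be wrapped in a small function that returns a boolean. The following is a sketch; the name `Test-JsonFile` is a hypothetical helper, not a built-in cmdlet.

```powershell
# Hypothetical helper: returns $true when the file content parses as JSON, otherwise $false.
function Test-JsonFile {
    param (
        [Parameter(Mandatory)] [string] $Path
    )

    try {
        # -ErrorAction Stop also turns a missing file into a terminating error.
        Get-Content -Path $Path -Raw -ErrorAction Stop |
            ConvertFrom-Json -ErrorAction Stop |
            Out-Null
        $true
    }
    catch {
        $false
    }
}
```

A test can then assert on `Test-JsonFile -Path ./azuredeploy.json` before any property-level checks run, so that a syntax error is reported once instead of as a cascade of failing assertions.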

#vdc/Modules/SQLDatabase/2.0/Tests/module.tests.ps1

# $TemplateFileTestCases can store multiple TemplateFileTestCases to test

It "Converts from JSON and has the expected properties" `

-TestCases $TemplateFileTestCases {

# Accept a template file per time

Param ($TemplateFile)

# Define all expected properties as per ARM schema

$expectedProperties = '$schema',

'contentVersion',

'parameters',

'variables',

'resources',

'outputs'| Sort-Object

# Get actual properties from the TemplateFile

$templateProperties =

(Get-Content (Join-Path "$here" "$TemplateFile") |

ConvertFrom-Json -ErrorAction SilentlyContinue) |

Get-Member -MemberType NoteProperty |

Sort-Object -Property Name |

ForEach-Object Name

# Assert that the template properties are present

# PowerShell will compare strings here, as toString() is invoked on the array of Names

$templateProperties | Should Be $expectedProperties

}

The test asserts that the $expectedProperties are present within the JSON file by getting the Name of each NoteProperty of the converted PowerShell object.

The NoteProperties of a blank ARM template look like this:

$TemplateJSON | Get-Member -MemberType NoteProperty

# TypeName: System.Management.Automation.PSCustomObject

# Name MemberType Definition

# ---- ---------- ----------

# $schema NoteProperty string $schema=https://...

# contentVersion NoteProperty string contentVersion=1.0.0.0

# outputs NoteProperty PSCustomObject outputs=

# parameters NoteProperty PSCustomObject parameters=

# resources NoteProperty Object[] resources=System.Object[]

# variables NoteProperty PSCustomObject variables=

$TemplateJSON | Get-Member -MemberType NoteProperty | select Name

# Name

# ----

# $schema

# contentVersion

# outputs

# parameters

# resources

# variables

Using the Name property returned from the Get-Member cmdlet, we can assert that all expected JSON properties are present.

The same test can be applied for the parameter files, too.
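
For a parameter file, the expected top-level properties are `$schema`, `contentVersion` and `parameters`. A minimal sketch of that check follows; the helper name `Get-MissingTopLevelProperty` is an assumption for illustration, and the example document intentionally omits `parameters`.

```powershell
# Hypothetical helper: lists the expected top-level properties that a JSON document lacks.
function Get-MissingTopLevelProperty {
    param (
        [Parameter(Mandatory)] [string] $Json,
        [Parameter(Mandatory)] [string[]] $Expected
    )

    # Names of all NoteProperties on the converted object.
    $actual = ($Json | ConvertFrom-Json | Get-Member -MemberType NoteProperty).Name
    $Expected | Where-Object { $actual -notcontains $_ }
}

# Single-quoted here-string so that $schema is not expanded as a variable.
$parameterFile = @'
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0"
}
'@

$missingTopLevel = @(Get-MissingTopLevelProperty -Json $parameterFile -Expected '$schema', 'contentVersion', 'parameters')
$missingTopLevel   # lists parameters, the only expected property that is absent
```

Returning the missing names, rather than comparing two sorted lists, makes the failure message say exactly which property is absent.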

The module.tests.ps1 then parses the given template and checks if the required parameters in a given parameter file are present.

A required parameter can be identified by the missing defaultValue property.

Given the following parameters property of an ARM template, we can check whether the mandatory parameter is present in the parameters file.

As the mandatory parameter doesn’t specify a defaultValue, the ARM template expects a value to be passed.

// azuredeploy.json

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"name": {

"type": "string",

"defaultValue": "Mark"

},

"mandatory": {

"type": "string"

}

} // ...

}

Using PowerShell, we can search for the property that is missing defaultValue.

Leveraging -FilterScript, we keep only those parameters whose Value has no property named defaultValue.

Where-Object -FilterScript { -not ($_.Value.PSObject.Properties.Name -eq "defaultValue") }

In other words, we check each parameter's Value for the presence of a defaultValue property.

To explain and visualize what is happening, the PSObject output is displayed below.

$TemplateFile = './azuredeploy.json'

$TemplateJSON = Get-Content $TemplateFile -Raw | ConvertFrom-Json

$TemplateJSON.Parameters.PSObject.Properties

# Value : @{type=string; defaultValue=Mark} # <----- Inside Value we find the object, by looking for the Property with Name defaultValue

# MemberType : NoteProperty

# IsSettable : True

# IsGettable : True

# TypeNameOfValue : System.Management.Automation.PSCustomObject

# Name : name

# IsInstance : True

# Value : @{type=string} # <----- The Property with Name defaultValue is missing here!

# MemberType : NoteProperty

# IsSettable : True

# IsGettable : True

# TypeNameOfValue : System.Management.Automation.PSCustomObject

# Name : mandatory

# IsInstance : True

$TemplateJSON.Parameters.PSObject.Properties |

Where-Object -FilterScript {

-not ($_.Value.PSObject.Properties.Name -eq "defaultValue")

}

# Value : @{type=string}

# MemberType : NoteProperty

# IsSettable : True

# IsGettable : True

# TypeNameOfValue : System.Management.Automation.PSCustomObject

# Name : mandatory

# IsInstance : True

Using the same idea, we can then check whether the returned values are present in the parameter file, by reading that file and again getting the Name of each parameter.

$requiredParametersInTemplateFile = (Get-Content (Join-Path "$here" "$($Module.Template)") |

ConvertFrom-Json -ErrorAction SilentlyContinue).Parameters.PSObject.Properties |

Where-Object -FilterScript { -not ($_.Value.PSObject.Properties.Name -eq "defaultValue") } |

Sort-Object -Property Name |

ForEach-Object Name

$allParametersInParametersFile = (Get-Content (Join-Path "$here" "$($Module.Parameters)") |

ConvertFrom-Json -ErrorAction SilentlyContinue).Parameters.PSObject.Properties |

Sort-Object -Property Name |

ForEach-Object Name

$allParametersInParametersFile | Should Contain $requiredParametersInTemplateFile
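
The snippet above depends on `$here` and `$Module` from the surrounding VDC test file. As a self-contained sketch of the same idea, the hypothetical helper `Get-MissingRequiredParameter` below takes both documents as JSON strings and returns the required parameters that the parameter file does not supply. The parameter file content is made up for this example.

```powershell
# Hypothetical helper: names of template parameters without a defaultValue
# that the parameter file does not supply.
function Get-MissingRequiredParameter {
    param (
        [Parameter(Mandatory)] [string] $TemplateJson,
        [Parameter(Mandatory)] [string] $ParameterJson
    )

    # A parameter is required when its definition has no defaultValue property.
    $required = @(
        ($TemplateJson | ConvertFrom-Json).parameters.PSObject.Properties |
            Where-Object { $_.Value.PSObject.Properties.Name -notcontains 'defaultValue' } |
            ForEach-Object Name
    )
    $supplied = @(
        ($ParameterJson | ConvertFrom-Json).parameters.PSObject.Properties |
            ForEach-Object Name
    )

    $required | Where-Object { $supplied -notcontains $_ }
}

$templateJson = @'
{
  "parameters": {
    "name":      { "type": "string", "defaultValue": "Mark" },
    "mandatory": { "type": "string" }
  }
}
'@

# Example parameter file that forgets to supply "mandatory".
$parameterJson = @'
{
  "parameters": {
    "name": { "value": "Jane" }
  }
}
'@

$missingParameters = @(Get-MissingRequiredParameter -TemplateJson $templateJson -ParameterJson $parameterJson)
$missingParameters   # lists mandatory
```

An assertion on an empty result then fails with the names of the parameters that still need a value.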

Imperative Considerations

With the imperative approach, the subject under test varies and depends on the implementation.

A unit test should execute quickly. As the Az PowerShell module communicates with Azure, calling it would violate the definition of a unit test.

Hence you want to mock all Az cmdlets and test the flow of your implementation.

Assert-MockCalled ensures the flow of your code is as expected.

Generally we trust that the provided commands are thoroughly tested by Microsoft.

A unit test for a deployment script can also leverage PowerShell's WhatIf functionality as a way of preventing actual execution against Azure.

New-AzResourceGroupDeployment -ResourceGroupName $rg -TemplateFile $tf -TemplateParameterFile $tpf -WhatIf

A deployment script should implement the ShouldProcess functionality of PowerShell.

[CmdletBinding(SupportsShouldProcess=$True)]

# ...

if ($PSCmdlet.ShouldProcess("ResourceGroupName $rg deployment of", "TemplateFile $tf")) {

New-AzResourceGroupDeployment -ResourceGroupName $rg -TemplateFile $tf -TemplateParameterFile $tpf

}

This ensures the script can be executed using the -WhatIf switch to execute a dry run of the code.
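
The following sketch shows both ideas together without requiring Pester or an Azure connection. It replaces `New-AzResourceGroupDeployment` with a hand-rolled stand-in function that only counts calls (Pester's Mock would normally do this job), and wraps the deployment in a hypothetical function `Invoke-TemplateDeployment`. The resource group name and file paths are placeholders.

```powershell
# Hand-rolled stand-in for the real cmdlet: it only records that it was called.
# Functions take precedence over cmdlets of the same name, so Azure is never contacted.
$script:deploymentCalls = 0
function New-AzResourceGroupDeployment {
    param ($ResourceGroupName, $TemplateFile, $TemplateParameterFile)
    $script:deploymentCalls++
}

# Hypothetical deployment function that honours -WhatIf through ShouldProcess.
function Invoke-TemplateDeployment {
    [CmdletBinding(SupportsShouldProcess = $true)]
    param (
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $TemplateFile,
        [Parameter(Mandatory)] [string] $TemplateParameterFile
    )

    # ShouldProcess(target, action) returns $false when -WhatIf is set.
    if ($PSCmdlet.ShouldProcess($ResourceGroupName, "Deploy template $TemplateFile")) {
        New-AzResourceGroupDeployment -ResourceGroupName $ResourceGroupName -TemplateFile $TemplateFile -TemplateParameterFile $TemplateParameterFile
    }
}

$arguments = @{
    ResourceGroupName     = 'rg-test'
    TemplateFile          = './azuredeploy.json'
    TemplateParameterFile = './azuredeploy.parameters.json'
}

Invoke-TemplateDeployment @arguments -WhatIf   # dry run: the stand-in is not called
$callsAfterDryRun = $script:deploymentCalls

Invoke-TemplateDeployment @arguments           # regular run: the stand-in is called once
$callsAfterRealRun = $script:deploymentCalls
```

Comparing the two counters verifies the flow of the script: nothing is deployed during a dry run, and exactly one deployment is started otherwise.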